Databricks Tips, Tricks and Hacks

Welcome to our website.

| Tip | Description | |

|---|---|---|

|

|

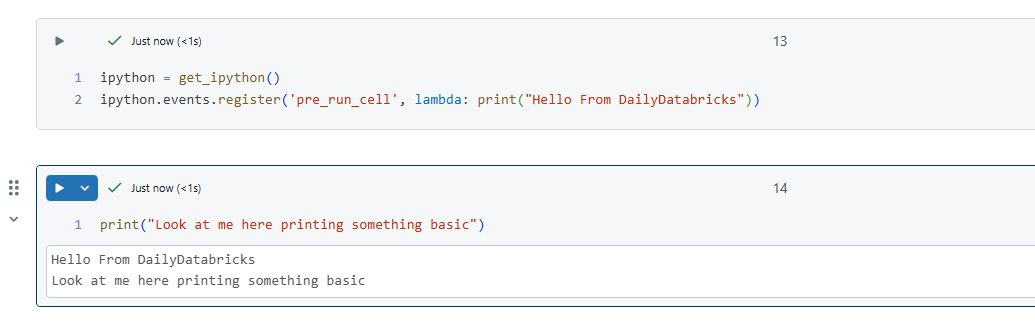

IPython Events | Learn how to implement smart logging, automated DataFrame validation, and cross-language monitoring in Databricks using IPython events. Perfect for data engineers looking to next level capabilities to their notebooks. |

|

|

Custom IPython Magics in Databricks | Unlock the power of custom IPython magics in Databricks to streamline your data engineering workflows. Learn how to create line and cell magics that simplify common tasks like checking cluster configurations, analyzing SQL execution plans, and monitoring notebook context. Perfect for data engineers and analysts looking to boost their productivity with practical, reusable code snippets |

|

|

Exploring Databricks Metadata with Spark Catalog and Unity Catalog | Learn how to leverage Spark Catalog APIs to programmatically explore and analyze the structure of your Databricks metadata. |

|

|

Unlocking the Power of Databricks Magic Commands | Discover the magic of Databricks notebooks with built-in commands that boost productivity and streamline your workflow. * Explore a variety of line and cell magics * Leverage commands for timing code execution * Utilize magics for logging and running shell scripts |

|

|

Easy Download Links from Databricks Notebooks using FileStore | Filestore is not actively recommended and considered legacy by Databricks |

|

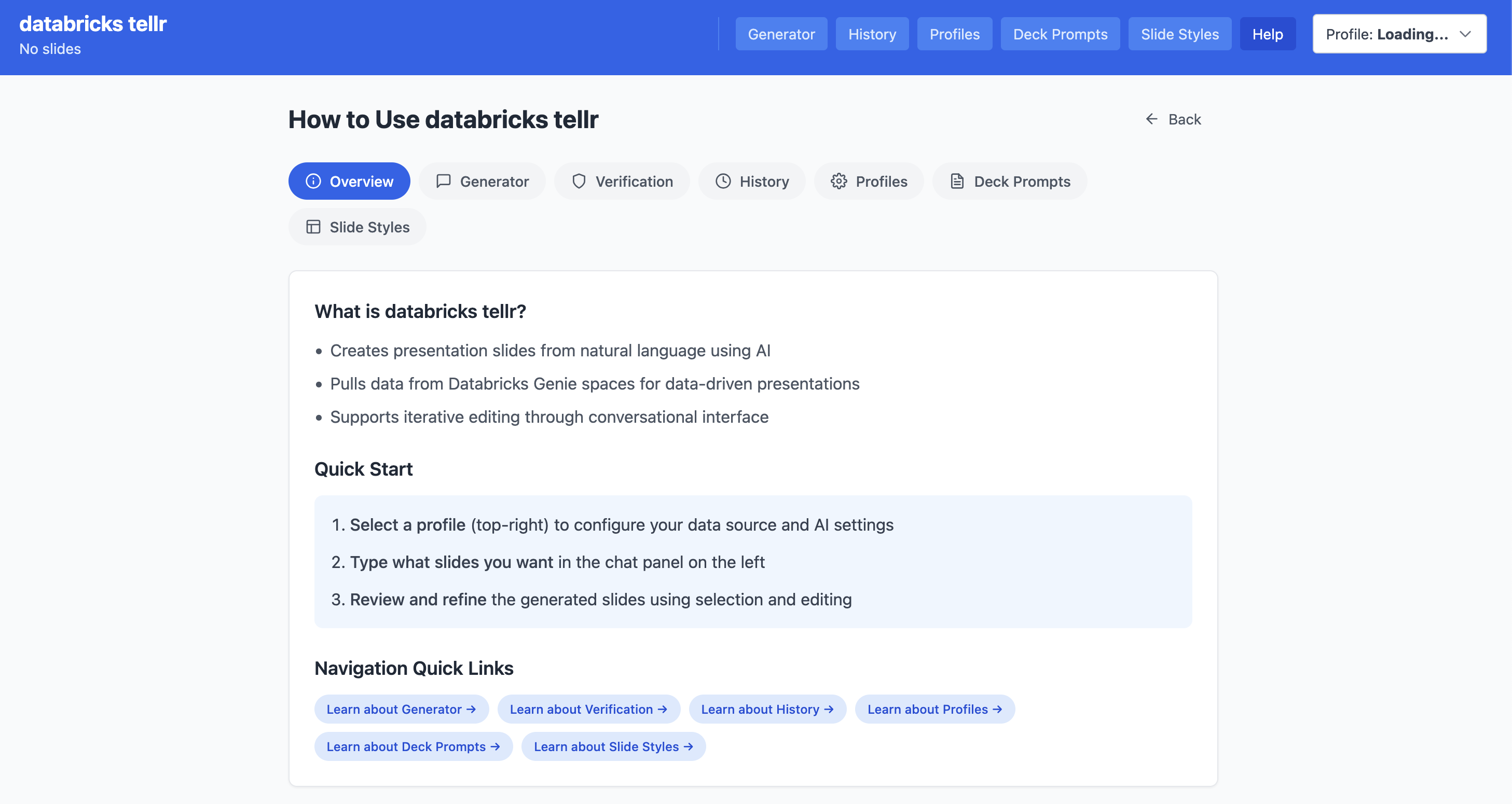

Getting Started with TellR: AI-Powered Slides from Databricks | Learn how to install and use TellR, an agentic Databricks App that turns your data into interactive slide decks through natural language. |

|

|

Reusable Window Functions | Learn how to define and reuse window specifications across multiple window functions using named windows in Databricks SQL and PySpark. |

|

|

Retrieving Notebook Bindings Dynamically | Learn how to retrieve run parameters. Understand the use of Databricks widgets and dynamically getting the notebook bindings for effective parameter handling. |

|

|

Loading Unit Test Cases Dynamically in a Notebook | Dynamically load Python test cases from a notebook module. Explore the benefits and the pitfalls of this approach in your testing strategy. |

|

Notebook Context | Learn about the Notebook Context Object and how you can leverage it to enhance your notebook logging and experience. |

|

|

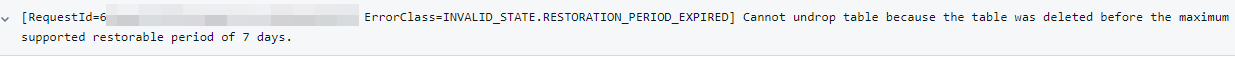

Undropping Managed Tables | Learn how to address the INVALID_STATE.RESTORATION_PERIOD_EXPIRED error in Databricks SQL when attempting to undrop a table within the 7-day restoration period. Discover techniques using SHOW TABLES DROPPED and table IDs to troubleshoot and resolve table restoration issues in your Unity Catalog environment. |

|

Efficient Performance Testing with Spark Write NOOP | Explore how to use Spark’s NOOP write format for efficient data processing testing and development. Understand how to implement it and its benefits in your Spark workflows. |

|

|

Databricks Azure Managed Identity | Handle edgecase access control by utilizing the user assigned Managed Identity in your Azure Databricks workspace. |

|

|

Enhancing Delta Tables with Custom Metadata Logging | Learn how to leverage Delta Lake custom metadata for better data lineage, governance, and pipeline observability. Includes practical examples and best practices. |
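
The Reusable Window Functions tip covers named windows. As a hedged, engine-agnostic sketch, the snippet below uses Python's built-in `sqlite3` module as a stand-in for Databricks SQL, because both accept the same `WINDOW w AS (...)` clause; the `sales` table and its rows are made up for illustration. One window specification is defined once and reused by two window functions. It assumes a SQLite build with window-function support, which most current Python installations include.

```python
import sqlite3

# In-memory SQLite database, used here only as a stand-in for Databricks SQL.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, day_num INTEGER, amount INTEGER)")

# Hypothetical sample data for illustration.
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("east", 1, 10), ("east", 2, 20), ("east", 3, 5), ("west", 1, 7), ("west", 2, 3)],
)

# The WINDOW clause names the specification once; both functions reuse it via OVER w.
query = """
SELECT region,
       day_num,
       amount,
       SUM(amount)  OVER w AS running_total,
       ROW_NUMBER() OVER w AS row_num
FROM sales
WINDOW w AS (PARTITION BY region ORDER BY day_num)
ORDER BY region, day_num
"""

rows = conn.execute(query).fetchall()
for row in rows:
    print(row)
```

In PySpark, the equivalent pattern is to build a single `Window.partitionBy(...).orderBy(...)` specification and pass it to several `.over(...)` calls, so the partitioning and ordering are written only once.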

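
The Loading Unit Test Cases Dynamically in a Notebook tip can be illustrated with a minimal sketch using only the standard `unittest` module. Rather than listing test classes by hand, it scans a namespace (in a notebook, typically `globals()`) for `unittest.TestCase` subclasses and builds a suite from them. `load_notebook_tests` and `add_columns` are hypothetical names chosen for illustration, and this is not necessarily the exact approach the article takes.

```python
import unittest


def load_notebook_tests(namespace):
    """Hypothetical helper: collect every TestCase subclass found in `namespace`."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    # Snapshot the values so the namespace can change safely while we iterate.
    for obj in list(namespace.values()):
        if (
            isinstance(obj, type)
            and issubclass(obj, unittest.TestCase)
            and obj is not unittest.TestCase
        ):
            suite.addTests(loader.loadTestsFromTestCase(obj))
    return suite


def add_columns(a, b):
    """Hypothetical function under test."""
    return a + b


class AddColumnsTests(unittest.TestCase):
    def test_adds_positive(self):
        self.assertEqual(add_columns(2, 3), 5)

    def test_adds_negative(self):
        self.assertEqual(add_columns(-1, 1), 0)


# Discover tests from the current namespace and run them without exiting the process.
suite = load_notebook_tests(globals())
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

One pitfall this makes visible: a notebook's `globals()` keeps every class defined during the session, so a test class that was renamed or deleted from a cell is still collected until the Python process is restarted.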